We propose CLIP-Head, a novel approach to text-driven generation of neural parametric 3D head models.

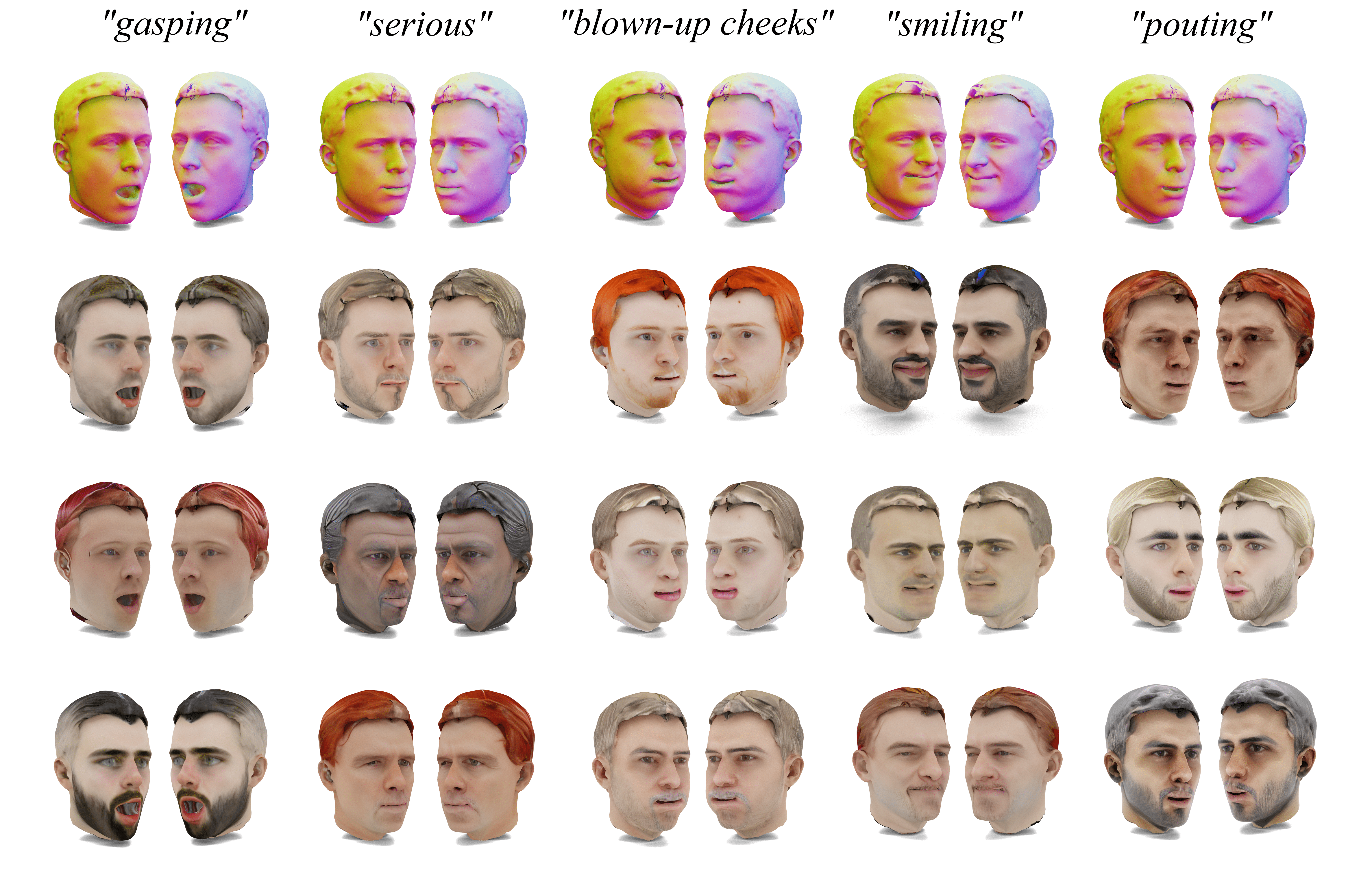

Our method takes simple natural-language text prompts describing appearance and facial expressions, and generates 3D neural head avatars with accurate geometry and high-quality texture maps.

Unlike existing approaches, which use conventional parametric head models with limited control and expressiveness, we leverage Neural Parametric Head Models (NPHM), offering disjoint latent codes for the disentangled encoding of identities and expressions.

To facilitate text-driven generation, we propose two weakly-supervised mapping networks that map the CLIP encoding of the input text prompt to NPHM's disjoint identity and expression vectors.

The predicted latent codes are then fed to a pre-trained NPHM network to generate 3D head geometry.
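
As a rough illustration of the mapping step described above, the two networks can be sketched as small independent MLPs that read the same text embedding and emit separate identity and expression codes. This is a minimal sketch under stated assumptions, not the architecture used in this work: the layer sizes, the latent dimensions (`ID_DIM`, `EXPR_DIM`), and all function names are hypothetical, the weights are untrained, and a random unit vector stands in for the CLIP text encoding.

```python
import numpy as np

# All dimensions below are assumptions chosen for illustration only.
CLIP_DIM = 512   # assumed size of the text embedding
ID_DIM = 128     # hypothetical size of the identity latent code
EXPR_DIM = 64    # hypothetical size of the expression latent code
HIDDEN_DIM = 256 # hypothetical hidden width


def init_mlp(rng, in_dim, hidden_dim, out_dim):
    """Create parameters for a two-layer MLP with small random weights."""
    return {
        "w1": rng.normal(0.0, 0.02, (in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "w2": rng.normal(0.0, 0.02, (hidden_dim, out_dim)),
        "b2": np.zeros(out_dim),
    }


def mlp_forward(params, x):
    """Apply linear -> ReLU -> linear to a single embedding vector."""
    hidden = np.maximum(x @ params["w1"] + params["b1"], 0.0)
    return hidden @ params["w2"] + params["b2"]


def text_to_latents(text_embedding, id_mapper, expr_mapper):
    """Map one text embedding to disjoint identity and expression codes."""
    z_id = mlp_forward(id_mapper, text_embedding)
    z_expr = mlp_forward(expr_mapper, text_embedding)
    return z_id, z_expr


rng = np.random.default_rng(0)
id_mapper = init_mlp(rng, CLIP_DIM, HIDDEN_DIM, ID_DIM)
expr_mapper = init_mlp(rng, CLIP_DIM, HIDDEN_DIM, EXPR_DIM)

# Stand-in for the CLIP text encoding of a prompt (random unit vector).
text_embedding = rng.normal(size=CLIP_DIM)
text_embedding /= np.linalg.norm(text_embedding)

z_id, z_expr = text_to_latents(text_embedding, id_mapper, expr_mapper)
print(z_id.shape, z_expr.shape)
```

In a full pipeline, `z_id` and `z_expr` would be passed to the pre-trained NPHM decoder to obtain the head geometry; that decoder is not reproduced here.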

Since NPHM meshes do not support textures, we propose a novel aligned parametrization technique, followed by text-driven generation of texture maps using a recently proposed controllable diffusion model for text-to-image synthesis.

Our method is capable of generating 3D head meshes with arbitrary appearances and a variety of facial expressions, along with high-quality texture details.